The General Data Protection Regulation (GDPR), the EU’s data-protection law, requires accuracy in processing personal data. But generative-AI services, such as large language models (LLMs), may “hallucinate” or reflect information that is false but widely spread.

On one hand, such inaccuracies may seem like an inherent feature of the technology. On the other, some major LLM providers aspire to make their products more accurate, and perhaps even to “organize the world’s information and make it universally accessible and useful,” to borrow Google’s famous mission statement.

How we choose to think about this matters, as it may significantly affect how data-protection law will be applied to AI.

Consider the following view, represented by some privacy activists:

1. LLMs can be prompted to produce inaccurate outputs about identifiable individuals;

2. At least some cases of (1) constitute breaches of the GDPR (specifically, its principle of accuracy);

3. LLMs could only be provided lawfully in the EU by making (1) technically impossible;

4. (3) is not currently feasible; therefore

5. LLMs are illegal under the GDPR.

Let’s examine this argument while reflecting on the value of LLMs and on how our legal interpretations may be colored by the narratives we adopt about the technology. The analogy I draw here is with how EU data-protection law accommodates search engines, which I think is relevant to applying the GDPR’s principle of accuracy. I also propose that cautious optimism remains the correct approach to the still-unrealized potential of AI technology.

LLMs Can Be Prompted to Produce Inaccurate Outputs About Identifiable Individuals

It’s possible to prompt LLMs to produce outputs that are arguably related to identifiable individuals but that contain “facts” about those individuals that are not true. Not every instance of this is relevant under the GDPR. As I noted in a recent newsletter:

> … the fact that we can get personal data in the outputs of a model does not necessarily mean the model is processing personal data. Indeed, this can happen for at least two other reasons:
>
> - Users might be providing personal data directly in their prompts; and
>
> - Models often generate outputs that appear to be personal data—whether true or fictitious—based not on “memorized” information, but on statistical probability.
>
> For example, if asked about “John Smith,” a model might assume the person is male and, if given a job title, might infer a probable birth year based on implied seniority.

I also noted, however, that “there are cases where models output personal data not implied by prompts.” This happens to public figures and others whose “information appears so frequently in training data that it becomes represented in model weights and can be retrieved through specific prompting.”

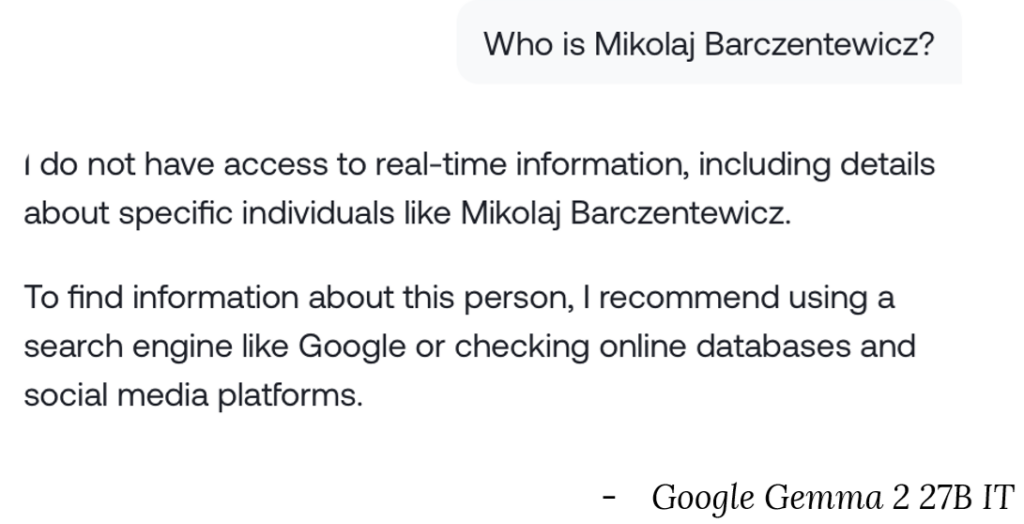

I don’t think I’m a public person in a traditional sense. Still, I appear on the internet in quite a few places, which I guess explains why I can ask LLMs about myself and get results that are arguably related to me, without being implied by my prompts.

Are Inaccuracies GDPR Breaches?

An LLM output containing inaccurate information about someone does not always or necessarily constitute a GDPR violation. A contrary interpretation would treat accuracy as an absolute requirement, ignoring that even the rights to privacy and data protection that the GDPR aims to protect are not absolute.

Could an LLM producing inaccurate information ever amount to a GDPR violation? Conceivably, yes. This could be the case, for example, where the LLM service provider fails to take proportionate steps, reasonably within its powers and capabilities, to reduce that risk. The crucial question is: what can be expected from service providers? Where should we draw the line?

The standard operating procedure for LLMs—at least those developed by major US and EU players—includes practices for both development and deployment:

- automated removal of personal data from training data;

- use of synthetic data;

- training techniques (e.g., regularization methods to improve generalization and reduce overfitting);

- post-training techniques (e.g., reinforcement learning from human feedback, or “RLHF,” to “teach” the model to refuse to answer questions about people who are not public persons);

- deployment safeguards (e.g., prompt/output filtering, soliciting user feedback); and

- accountability mechanisms (procedures for implementing the techniques listed above, documentation, employee training, and so on).

One can hope that those and other techniques will be continuously improved. American and European AI providers are trying to balance offering useful services against reducing the risk of outputting inaccurate data about real individuals. The legal question is whether, under the GDPR, they strike the right balance.

The Value of LLMs Under EU Law

To answer this question, we need to consider the value of LLMs from the perspective of EU law. Even granting the obvious point that the GDPR principle of accuracy is not absolute, AI’s opponents could argue that LLMs bring so little value that even the most restrictive measures to ensure LLM accuracy are, in fact, proportionate. It therefore matters tremendously how we think about LLMs—what narrative or mental model do we build? To simplify, I’ll suggest two such models.

Focusing on LLMs as inherently ‘random’ or ‘probabilistic’

From this perspective, the value of LLMs lies in their ability to surprise us, provide creative solutions, and even help us to express ourselves. These are not trivial considerations, and they may arguably fall under the fundamental freedom of expression under the EU Charter of Fundamental Rights.

Moreover, adopting this framing could provide a strong argument that the requirement of accuracy does not apply directly to LLM outputs, as they are not the kind of expression meant to be assessed as true or false. While they are not exactly opinions or caricatures, they may call for similar legal treatment as those more traditional forms of expression that do not constitute statements of fact.

It could, however, also be argued that if this is LLMs’ primary benefit, further restrictions on LLM development and deployment could be proportionate. Perhaps this could include some limitations on what training data can be used (e.g., excluding websites that prove challenging to anonymize). And perhaps LLM providers should do more to present LLMs as tools that should not be treated as providing factual information. To some extent, this is already being done with “small print” in chat interfaces and even in model outputs.

Perhaps such warnings and refusals could be made more prominent and frequent, although this would likely come at some cost to the models’ usefulness.

LLMs as tools to ‘organize the world’s information and make it universally accessible and useful’

I intentionally quoted the mission statement of Google Search. EU courts have already recognized search engines’ pivotal role in facilitating freedom of expression and information in Europe. This was, in fact, a key reason why the courts showed flexibility in applying EU data-protection law to search engines, taking into account the powers and capabilities of their operators.

Users already treat LLMs like search engines (they ask them for information) and even as search engines (they request links to relevant internet sources). LLM service providers are responding to this demand, aiming to make their products more accurate and to give users access to more information. Inaccuracy remains an issue for now, but it is being treated as a challenge to be overcome.

From this perspective, even the additional limitations that may seem proportionate under the first framing I proposed (thinking of LLMs chiefly as “creativity tools”) are likely to be disproportionate. This would be particularly true of limitations that may conflict with accuracy, such as restricting potential sources of training data.

In fact, LLM developers may, in some ways, already be doing more than what’s required by EU data-protection law. An argument can be made that removing personally identifiable information from training data makes those tools less useful for access to information. Current law also does not impose such requirements on search engines—i.e., it is widely accepted, and not seriously questioned under EU data-protection law, that it is permissible to google people.

Yes, we have procedures for “delisting” at the request of the person concerned. But even that does not typically involve removing the personal data from search indices (e.g., a “delisted” name may still appear in search results in a website title or a text snippet in response to a search query that doesn’t include the name).

The Importance of Cautious Optimism

As I have argued here, there is much to be learned from how EU data-protection law accommodated search engines. When confronted with search engines, the EU Court of Justice demonstrated remarkable foresight by choosing not to apply the strictest interpretation of data-protection law. It should be noted, however, that the Court made that determination in 2014, when search technology was already quite mature.

Today’s LLMs, by contrast, are in a place similar to where search engines found themselves in the late 1990s—emerging technologies with enormous potential but also significant uncertainties. If we imagine ourselves in 1998, considering how to regulate search engines, it becomes clear how shortsighted it would have been to impose overly restrictive regulations that might have stunted their development. The benefits we now take for granted from search technology might never have materialized under a more stringent regulatory regime.

The search case teaches us that cautious optimism often serves society better than restrictive skepticism when dealing with transformative technologies.

What does this mean for the value of LLMs and our mental models of what roles LLMs are to play? The first mental model I proposed may better fit the technology’s current limitations; LLMs do indeed sometimes produce inaccurate outputs. But the second model better reflects how people want to use LLM-powered services (to organize and access information) and the direction in which the technology may well develop. Perhaps there will always be some inaccuracies, even with significant technological improvements, as there also are with information accessed via search engines. The tools do not need to be perfect to be helpful, even pivotal, for access to information.

We can adopt the second mental model of LLMs’ value if we accept a cautiously optimistic approach about their possibilities. By this account, we should apply EU data-protection law in ways that fully account for the significant value such models are likely to generate from the perspective of fundamental rights. This leads us to a legal interpretation that calls for flexibility similar to that which we have already extended to search engines.